Modeling in dbt vs modeling in Holistics

“I’m currently doing my modeling in dbt. I see that Holistics also has a modeling layer. When should I model my data in dbt vs when should I do it in Holistics?”

What is dbt, what is Holistics?

dbt lets you write transformation code in SQL to turn raw data into transformed data that are ready for downstream consumption. Holistics occupies a different place - the BI layer, which helps organizations answer business questions and arrive at insights.

However, both dbt and Holistics support modeling. So what is the difference between them?

What is Modeling?

First, what is modeling? Modeling is ultimately about encoding business logic and context into data.

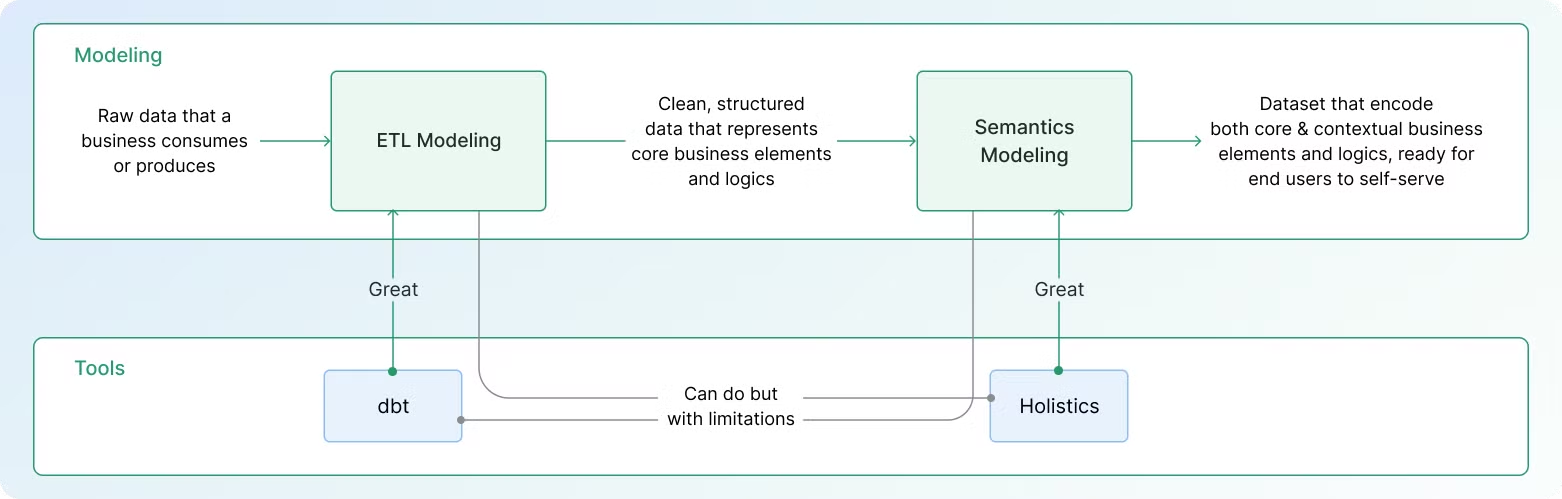

There are two important steps in Modeling:

- ETL Modeling: Representing the core business elements and logic within raw data, translating them into structured data formats.

- Semantics Modeling: Encoding contextual business elements and logics as relationships and formulas, serving them as user-facing datasets that can be self-serviced.

So back to the topic at hand: modeling in dbt vs modeling in Holistics:

- dbt is great at transforming raw data into cleaned and structured data. dbt lets you write transformation code to ensure that data represents accurate business entities. It helps data teams kickstart the modeling process and produces outputs for BI tools to make use of.

- Holistics is great at embedding contextual business elements and logic into self-service datasets. Holistics lets you define dynamic relationships between business entities and quickly create additional business formulas as needed. It helps data teams take what dbt produces and completes the modeling process, enabling companies to make data-driven decisions.

When should you model in dbt versus in Holistics? It’s not a this versus that situation. You should use dbt and Holistics together to do modeling: use dbt to clean and provide structures to data, then use Holistics to define relationships and business logic within data.

TLDR: When to model in Holistics and when to model in dbt?

You should use dbt to transform raw data into structured data and use Holistics to turn these structured data into models that are meaningful to your business.

| Scenario | When to Model in dbt | When to Model in Holistics |

|---|---|---|

| Transforming raw data into cleaned data | dbt excels at transforming raw data into clean, structured data by writing SQL transformations to handle data type casting, filtering, and standardization. | Holistics does offer features to transform and clean data. But it's not as powerful as dbt. |

| Creating fact and dimension tables | dbt is great for transforming data into fact and dimension tables, providing a solid foundation for further analysis. | Holistics does offer features to transform data into fact and dimension tables. But it's not as powerful as dbt. |

| Calculating simple metrics dynamically | dbt can calculate simple metrics, but it has limitations: metrics are tied to specific time grains and require separate models for each combination of metrics/dimensions. | Holistics is better for calculating metrics dynamically without being tied to specific time grains, making it more flexible and easier to manage. |

| Calculating complex metrics (e.g., cohort analysis) | dbt struggles with complex metrics due to the need for intricate SQL logic, rigid time grain adjustments, and rewriting SQL for new dimensions. | Holistics excels in handling complex metrics with its high-level, declarative AQL syntax, allowing for dynamic adjustments and reusable metric definitions. |

A Practical Example

Let's consider a hypothetical e-commerce company and see how dbt handles increasingly complex analytics scenarios.

Transforming raw data into cleaned data - dbt excels here

Your business allows consumers to place orders over an e-commerce website. The raw data contain all the necessary information about the orders but with inconsistent formats and unstandardized values. You want your data to represent e-commerce orders that include an Order ID, who made the order, on what date, and whether the order has been completed successfully or not.

-- models/raw_orders.sql

SELECT

id as order_id,

user_id as customer_id,

CAST(created_at AS DATE) as order_date,

status,

CAST(total_amount AS DECIMAL(10,2)) as order_amount

FROM {{ source('raw_data', 'orders') }}

WHERE status != 'test'

-- models/cleaned_orders.sql

SELECT

order_id,

customer_id,

order_date,

CASE

WHEN status IN ('completed', 'shipped') THEN 'successful'

WHEN status IN ('cancelled', 'refunded') THEN 'unsuccessful'

ELSE 'pending'

END as order_status,

order_amount

FROM {{ ref('raw_orders') }}

Here, dbt effectively cleans and structurizes the raw order data, handling data type casting, filtering out test orders, and standardizing order statuses.

Giving factual and dimensional structures to data - dbt still performs pretty well

After cleaning data, you want to transform them into dimensions and facts so that you can separate between business entities (a product) and business events (a transaction).

-- models/dim_customers.sql

SELECT

customer_id,

first_name,

last_name,

email,

DATE(created_at) as registration_date

FROM {{ source('raw_data', 'users') }}

-- models/fact_orders.sql

SELECT

o.order_id,

o.customer_id,

o.order_date,

o.order_status,

o.order_amount,

p.product_id,

p.quantity,

p.price as unit_price,

p.quantity * p.price as line_item_total

FROM {{ ref('cleaned_orders') }} o

JOIN {{ source('raw_data', 'order_products') }} p

ON o.order_id = p.order_id

dbt is still pretty great for transforming data into dimension and fact tables that can be easily joined for analysis.

Calculating (fairly simple) metrics dynamically - dbt starts to show limitations

Can we write transformation code in dbt to calculate metrics? Definitely. Let’s see what happens when we use dbt to handle this task:

-- models/daily_order_metrics.sql

SELECT

order_date,

COUNT(DISTINCT order_id) as total_orders,

COUNT(DISTINCT customer_id) as unique_customers,

SUM(order_amount) as total_revenue,

AVG(order_amount) as average_order_value

FROM {{ ref('fact_orders') }}

GROUP BY order_date

-- models/product_revenue.sql

SELECT

p.product_category,

SUM(o.order_amount) AS total_revenue,

SUM(o.order_amount) / COUNT(DISTINCT o.order_id) AS average_order_value

FROM {{ ref('fact_orders') }} o

JOIN {{ ref('dim_products') }} p ON o.product_id = p.product_id

GROUP BY p.product_category

-- models/customer_revenue.sql

SELECT

c.customer_segment,

SUM(o.order_amount) AS total_revenue,

SUM(o.order_amount) / COUNT(DISTINCT o.order_id) AS average_order_value

FROM {{ ref('fact_orders') }} o

JOIN {{ ref('dim_customers') }} c ON o.customer_id = c.customer_id

GROUP BY c.customer_segment

While dbt can calculate these metrics, we're starting to see some issues:

- Metrics are tied to specific time grains (daily, monthly). What if you want to calculate weekly order metric, quarterly order metric and annual order metric? You’d have to write separate transformations to produce these metrics. You cannot dynamically change between time grains without pre-aggregating them at all these levels.

- Each new combination of metrics and dimensions requires a new model. You need a separate model for each dimension you want to analyze (product, customer segment, region). If you want to analyze revenue by a new dimension, you need to create a new model. Combining dimensions (e.g., revenue by product and region) would require yet another model. This approach leads to a proliferation of models, making maintenance challenging (did you know that thousands of models are the norms for dbt users).

- Reusing metric definitions across models is difficult. If you need to change the definition of a metric (e.g., exclude certain types of orders from revenue calculation), you need to update it in multiple places. This repetition can lead to inconsistencies if updates are not applied uniformly across all models. There's no central place to manage and version these metric definitions.

Calculating complex metrics - dbt clearly struggles

Let's stretch dbt further and use it to calculate cohort retention - a reasonably common metric that companies tend to care about:

-- models/cohort_retention.sql

WITH cohort_orders AS (

SELECT

customer_id,

DATE_TRUNC('month', MIN(order_date) OVER (PARTITION BY customer_id)) as cohort_month,

DATE_TRUNC('month', order_date) as order_month

FROM {{ ref('fact_orders') }}

),

cohort_size AS (

SELECT cohort_month, COUNT(DISTINCT customer_id) as cohort_customers

FROM cohort_orders

GROUP BY cohort_month

),

cohort_retention AS (

SELECT

cohort_month,

order_month,

COUNT(DISTINCT customer_id) as retained_customers

FROM cohort_orders

GROUP BY cohort_month, order_month

)

SELECT

cr.cohort_month,

cr.order_month,

cs.cohort_customers,

cr.retained_customers,

cr.retained_customers::FLOAT / cs.cohort_customers as retention_rate

FROM cohort_retention cr

JOIN cohort_size cs ON cr.cohort_month = cs.cohort_month

ORDER BY cr.cohort_month, cr.order_month

Here, we see several issues:

- Metrics are not easily reusable or adaptable to different time grains or dimensions. The cohort analysis above is based on a monthly time grain. If we wanted to adjust this to weekly or daily cohorts, we would need to write new SQL transformations or heavily modify the existing logic. This rigidity makes it difficult to dynamically explore different time grains without significant effort.

- Adding new dimensions requires rewriting SQL. If we want to perform cohort analysis by a different dimension (e.g., region or product category), we would have to modify the SQL significantly. This lack of flexibility makes it hard to iterate and experiment with different analyses. The SQL queries become highly coupled to specific data structures, and the effort to refactor them grows exponentially with each added dimension.

- Changes in metric definitions lead to multiple updates across models. If the definition of cohort retention changes (e.g., including only certain types of customers or orders), the SQL must be updated in multiple places. This requirement for repeated updates introduces the risk of inconsistencies and bugs, as changes might be applied incorrectly or overlooked in some models.

While dbt can technically handle complex metric calculations, it is not well-suited for calculating complex metrics that are used in different situations. The limitations in handling complex metrics stem from its reliance on static SQL transformation code, which lacks the flexibility, manageability, and adaptability needed for more sophisticated analytical use cases.

How would Holistics handle Complex Metrics Modeling?

We have talked about how dbt is not the right tool for calculating complex metrics. So what’s the alternative? At Holistics, we believe we have the answer to this problem.

Here is how you would use Holistics to perform Cohort Retention Analysis

Here’s the code setup:

Dataset e_commerce {

// Define a dimension that represents when each user makes their order

dimension acquisition_month_cohort {

model: users

type: 'date'

label: 'Acquisition Month Cohort'

definition: @aql min(orders.created_at | month()) | dimensionalize(users.id);;

}

// Calculate the Total Users metric

metric total_users {

label: "Total Users"

type: "number"

definition: @aql count(users.id);;

}

// Define a dimension that shows the month number

dimension month_no {

model: orders

label: 'Month Number'

type: 'number'

definition: @aql date_diff('month', orders.cohort_month, orders.created_at | month());;

}

// Define retention: how many users of the cohort are still active in consecutive months

metric retention {

label: 'Retention'

type: 'number'

definition: @aql (total_users*1.0) / (total_users | of_all(orders.month_no));;

}

}

What are the advantages compared to dbt when using Holistics to perform complex modeling?

Dynamic Metric Definitions without Complex SQL. Unlike dbt, which requires writing intricate SQL for complex metrics, Holistics comes with Analytics Querying Language (AQL short) that allows users to define metrics and dimensions using a high-level, declarative syntax. This approach abstracts away the complexity of the underlying SQL, allowing users to focus on business logic rather than technical implementation details. For example, the definition of the acquisition_month_cohort dimension in AQL uses a simple expression to compute the cohort month based on the user acquisition date.

dimension acquisition_month_cohort {

model: users

type: 'date'

label: 'Acquisition Month Cohort'

definition: @aql min(orders.created_at | month()) | dimensionalize(users.id);;

}

This code snippet defines a dimension dynamically without needing subqueries or window functions, simplifying the process of calculating cohort metrics.

Adaptability to Different Time Grains and Dimensions: AQL makes it easy to adapt metrics to different time grains and dimensions. For instance, the month_no dimension is defined to calculate the difference in months between a user’s cohort month and the order date.

dimension month_no {

model: orders

label: 'Month Number'

type: 'number'

definition: @aql date_diff('month', orders.cohort_month, orders.created_at | month());;

}

This allows analysts to dynamically adjust the cohort analysis to any time grain (e.g., daily, weekly) by changing the function or parameters in a centralized definition. There is no need to rewrite complex SQL queries for each different grain or dimension, as the dimensions and metrics are centrally defined and managed

Reusable Metric Definitions across Different Contexts:

AQL facilitates the reuse of metric definitions across different contexts without requiring redundant code. For example, the total_users metric is defined once:

metric total_users {

label: "Total Users"

type: "number"

definition: @aql count(users.id);;

}

This definition can be reused in multiple analyses (e.g., retention, engagement, LTV) without duplicating the logic. If the definition of "Total Users" needs to change (e.g., to exclude certain types of users), it is updated in one place, and all dependent metrics are automatically updated. This prevents the proliferation of model definitions and reduces the maintenance burden.