Connect to Databricks

Introduction

This documentation provides a comprehensive guide on connecting Databricks to Holistics as a data source. In the following sections, we will walk you through the necessary steps:

- Creating a personal access token and granting permissions for Holistics to interact with Databricks.

- Establishing the connection between Databricks and Holistics as a data source.

Create Databricks personal access token for Holistics

-

Create a personal access token for a Databricks user following the Databricks Personal Access Tokens Documentation

-

Assign specific privileges to your

DATABRICKS_USERNAMEas outlined below:

-

Grant USE CATALOG privilege on your catalog. This permits the use of a catalog. Refer to the Catalogs Guide for more information.

-

Grant USE SCHEMA privilege on your schema. This permits the use of a schema. Find additional details in the Schemas Guide.

-

Grant SELECT privilege on the tables, it is required for users to query a table. Refer to the Tables Guide for more information.

For further insights, we recommend reading about Databricks permission.

Obtain Databricks Connection Details

Obtain these details by following Databricks Doc.

You will need these details when creating a Databricks data source below.

Connect Databricks to Holistics as a data source

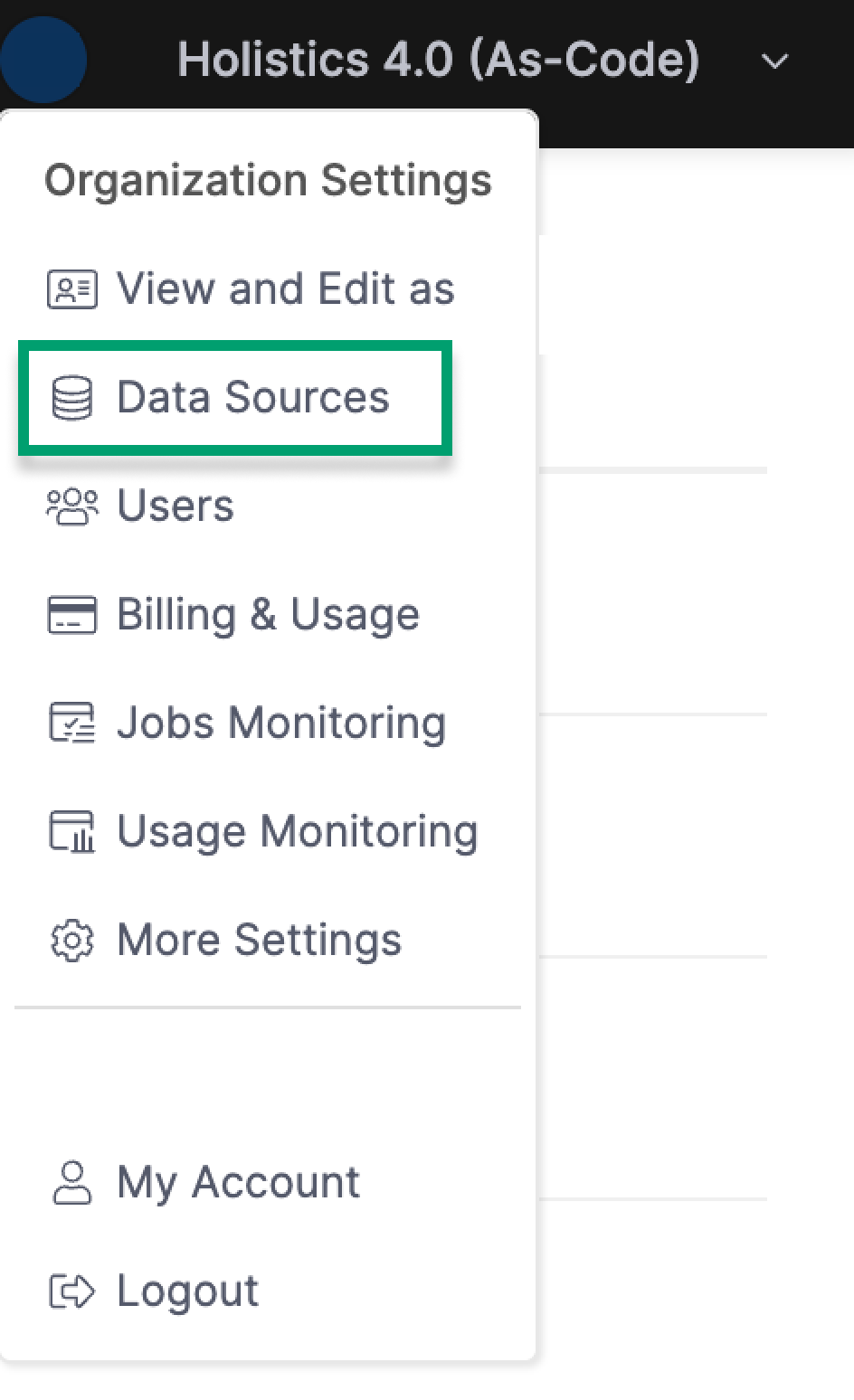

From the Holistics app's header bar, open Organization Settings menu -> Data Sources.

Click New Data Source button and fill in the details:

- Database Type: Select Databricks.

- Display Name: Provide a name for your connection.

- Host: Databricks Server hostname (from Databricks Connection Details)

- Port: Databricks Port (from Databricks Connection Details)

- Username: Databricks username of the dedicated Holistics user (created above).

- NOTE: If you are using a Personal Access Token, input the text

tokenin this field.

- NOTE: If you are using a Personal Access Token, input the text

- Password: Databricks Personal Access Token or password of the dedicated Holistics user (created above).

- HTTP Path: Databricks HTTP path (from Databricks Connection Details)

- Catalog: The Databricks Catalog that you want to connect Holistics to.

Click on Test connection to ensure the connection works, and then Save to complete the process.

Now you can start using your Databricks data source within Holistics.

FAQs

Does Holistics support legacy Hive metastore?

While Holistics can execute queries on a data warehouse in a legacy Hive metastore, it does not provide support for fetching the database schema from it.

Suggested Solutions/Workarounds:

- Databricks recommends migrating tables managed by the Hive metastore to the Unity Catalog metastore. Guidelines for this process can be found here.

- Although fetching the database schema is not possible, users can construct the modeling by creating Query Models. However, creating Table Models will not be feasible without the database schema.

How to query tables from multiple catalogs in Databricks?

Databricks supports querying across multiple catalogs using a three-level namespace that includes the catalog, schema, and table. However, Holistics Modeling primarily supports the schema and table levels, making direct querying of tables from multiple databases currently impossible.

Workaround:

Assuming your current catalog (in DataSource connection form) is catalog1, and you want to join with data in catalog2, create a Query Model that refers to tables in Catalog2. Create a Query Model instead of a 'table model' to link to your database table:

Model catalog2 {

type: 'query'

data_source_name: 'databricks'

dimension field1 {

type: 'number'

}

...

query: @sql select * from catalog2.public.users ;;

}

This model fetches data from catalog2.public.users (Catalog2) rather than the current Catalog1. You can treat this model like a standard table model and create relationships to connect it with other models in Catalog1.

Note: Ensure you have USE CATALOG permission on all catalogs.

Why does my query/report occasionally take a very long time to respond?

There are some common reasons:

- Databricks has a feature to terminate the database/cluster after it goes idle. After termination, a new query may take a few minutes to restart the database before execution.html#cluster-autostart-for-jobs-and-jdbcodbc-queries) the database first before actually getting executed.

- You can also check out Performance Troubleshooting documentation.